Creating and maintaining a website can be easy. Creating and maintaining a website that converts can be hard if you don't know what to improve!

Digital Solutions for Healthcare Professionals

For the past 20 years, Solution21 has been a premier web design and SEO agency for doctors and dentists. Located in sunny Southern California, this digital marketing and web design agency is packed full of marketing specialists, digital gurus, and talented UX/UI designers working together every day to bring our customers beautiful and functional websites.

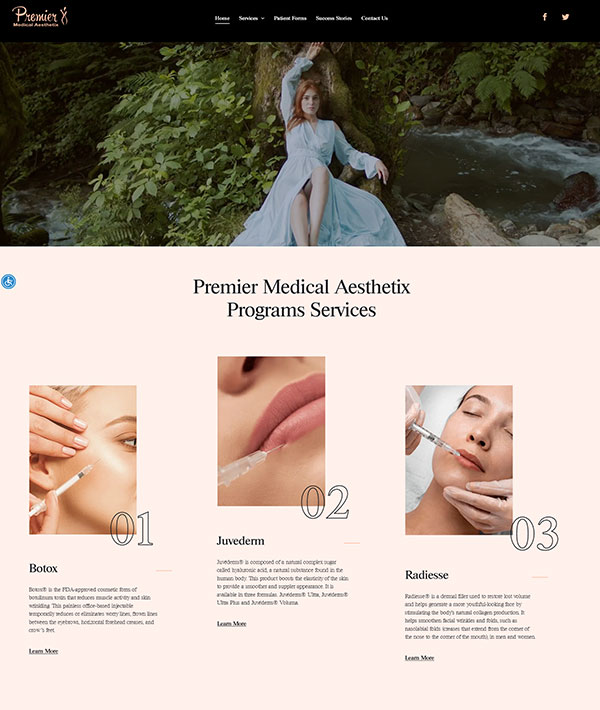

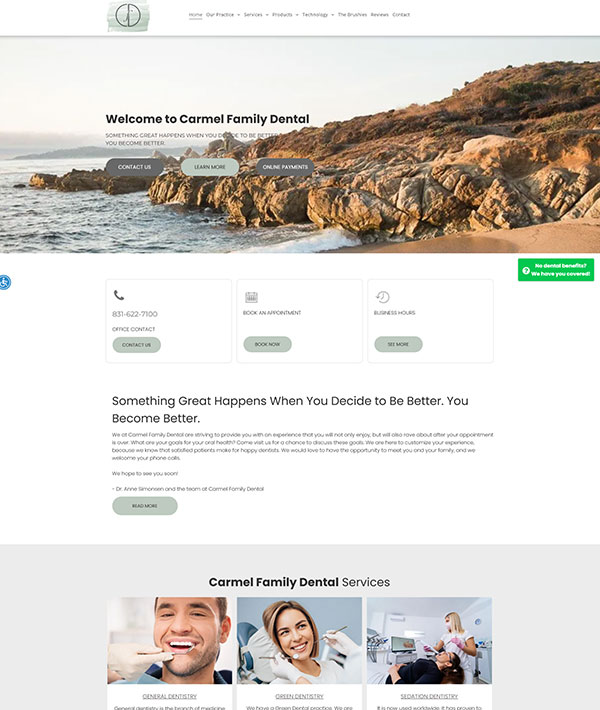

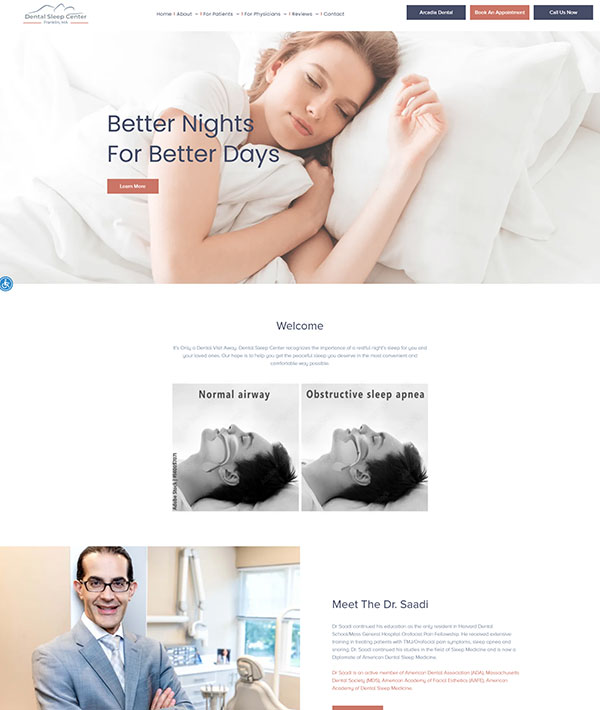

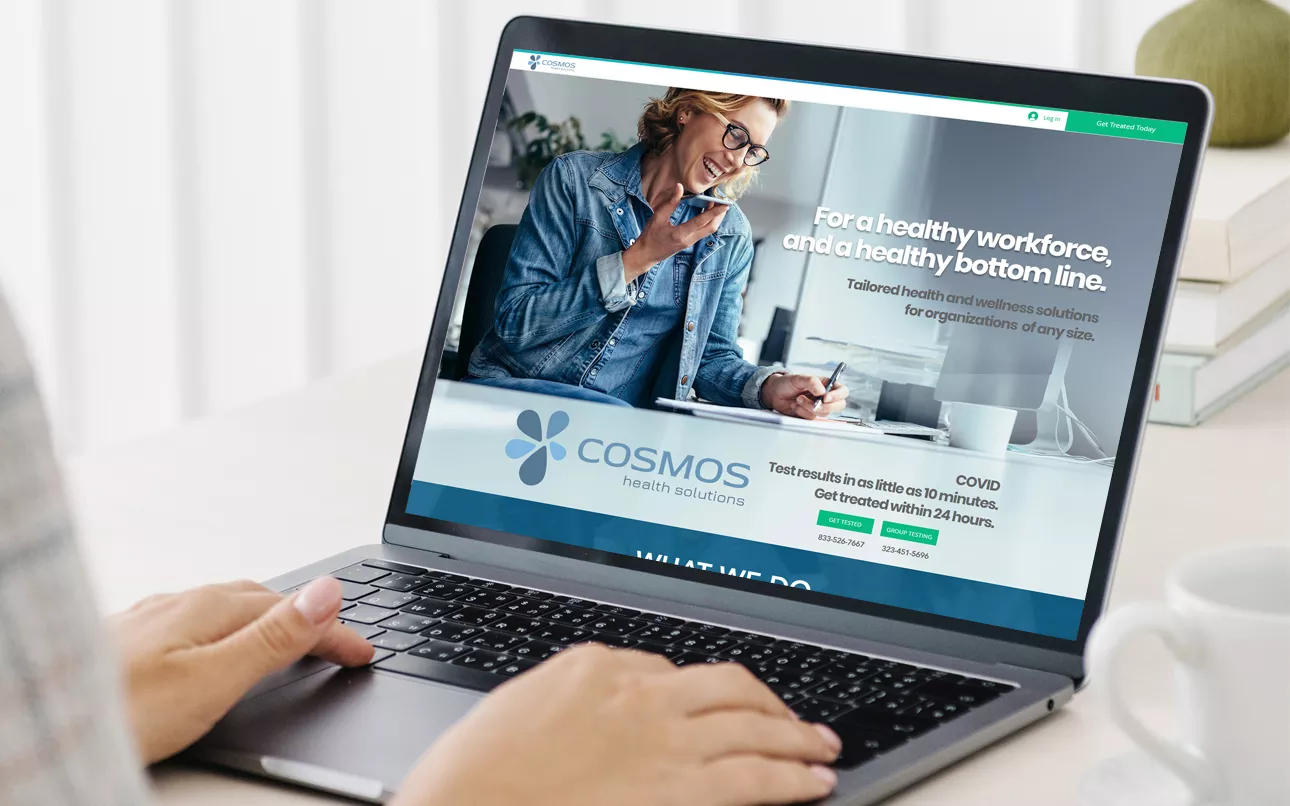

SLEEK WEB DESIGN

Our talented UX/UI team offers different website design services based on your digital needs! Choose from our comprehensive packages, along with add-ons to fit your practice. Our designs always offer accessibility, optimized performance, and functionality on all devices.

HEALTHCARE ENHANCEMENTS

Running a healthcare practice is hectic without the right tools and a team at your disposal! To run your practice smoothly, you need the help of a variety of digital tools. Solution21 offers ADA compliance, HIPAA-compliant forms, online appointment management, reputation management, call tracking, and more.

DIGITAL MARKETING SOLUTIONS

We offer digital marketing services tailored to fit your dental or medical practice. Choose an agency that will help you build your marketing strategy and reach your business goals. Choose from a wide range of services such as social media marketing, email marketing, content marketing, search engine optimization (SEO), pay-per-click (PPC) advertising, and more!

Internet Marketing

Search engine optimization and paid advertising management for increased visibility and profitability.

Website Design

Cutting-edge website design and landing pages that convert visitors into patients.

Brand Reputation

Making it easy for you to collect patient reviews while retaining complete control over your reviews!

Social Media Marketing

Build your brand and connect with your existing and potential customers to drive engagement and business growth.

Customer Experience

Tools such as appointment scheduling, online payments, and telemedicine for an enhanced customer experience.

Content Marketing

Blog posts, media releases, and partnerships aimed at targeted demographics to fuel business growth.

Why Choose Solution21?

When you choose Solution21 as your web design and internet marketing company to build your new website, our marketing professionals will create a high-quality website that can generate a tremendous number of leads, maximize your revenue, and improve the user experience.

Solution21 has many web design and internet marketing tools in its arsenal, such as search engine optimization, paid advertising management, social media profile management for increased profitability, and reputation management to protect your brand. Additionally, our experts can create a mobile application with useful features, an innovative dashboard, and a customizable scheduler.

We specialize in serving the healthcare community, including doctors and dentists. If you are not a medical professional, please visit our sister website, Web Concepts Media (WCM), which is dedicated to providing services to everyone else.

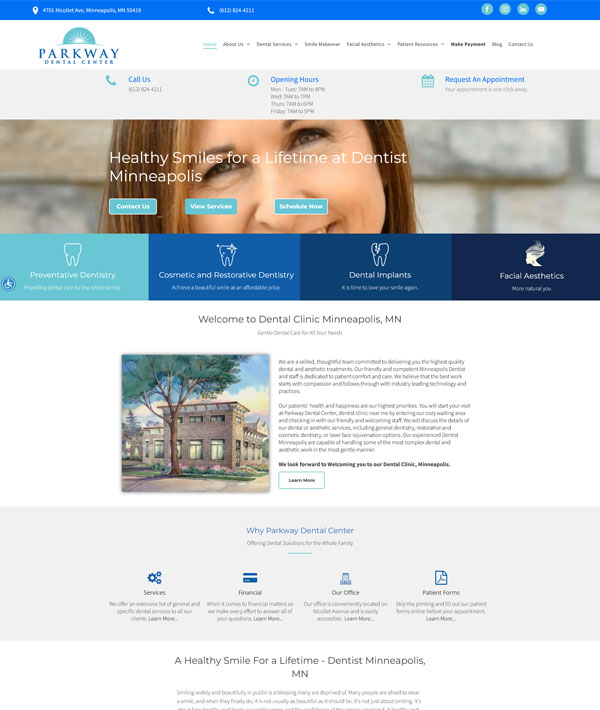

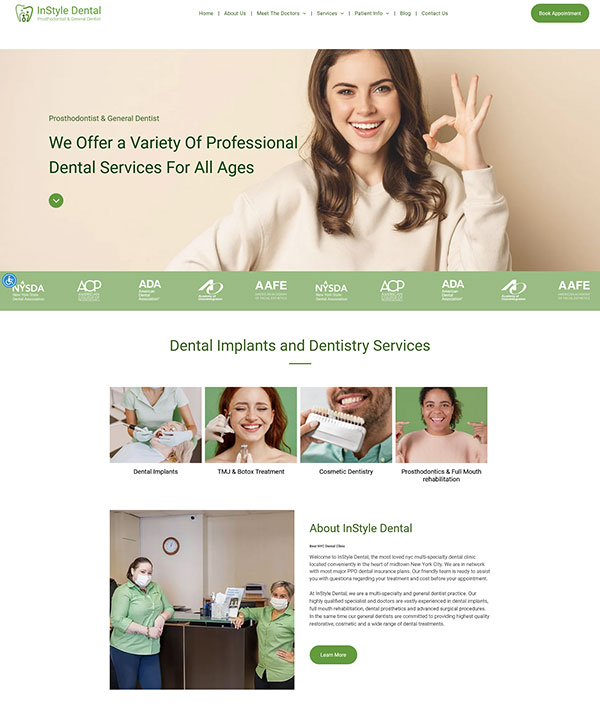

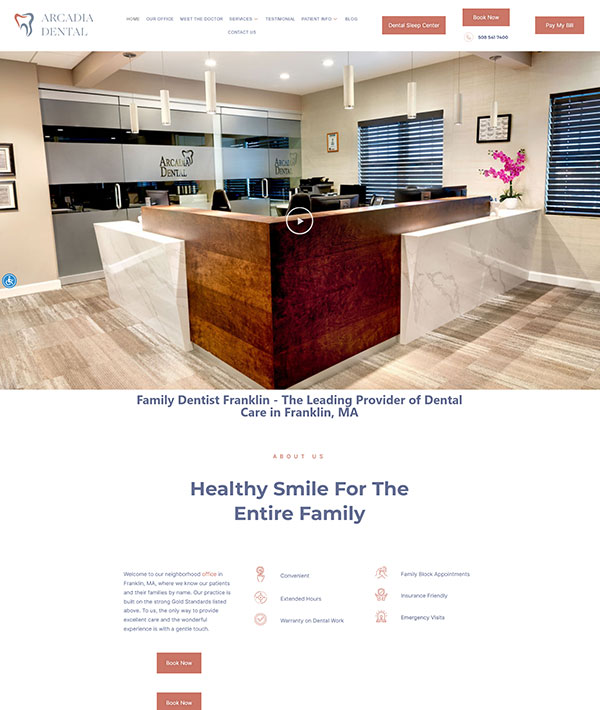

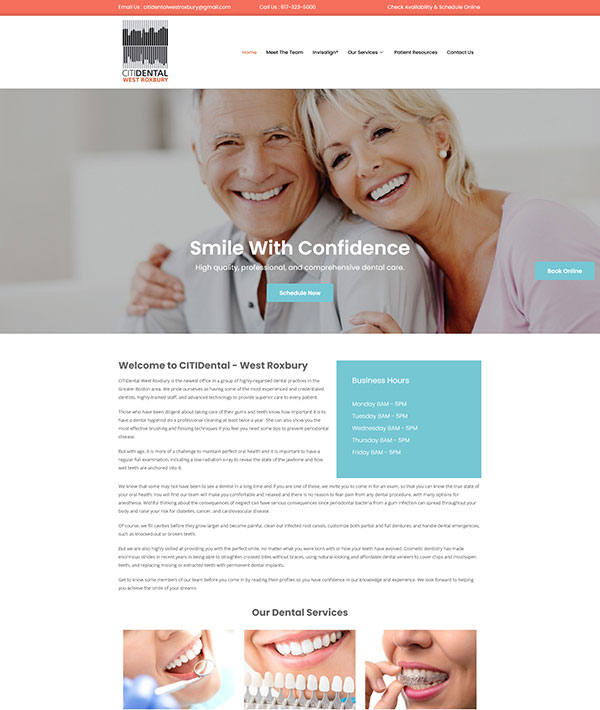

Completed Projects

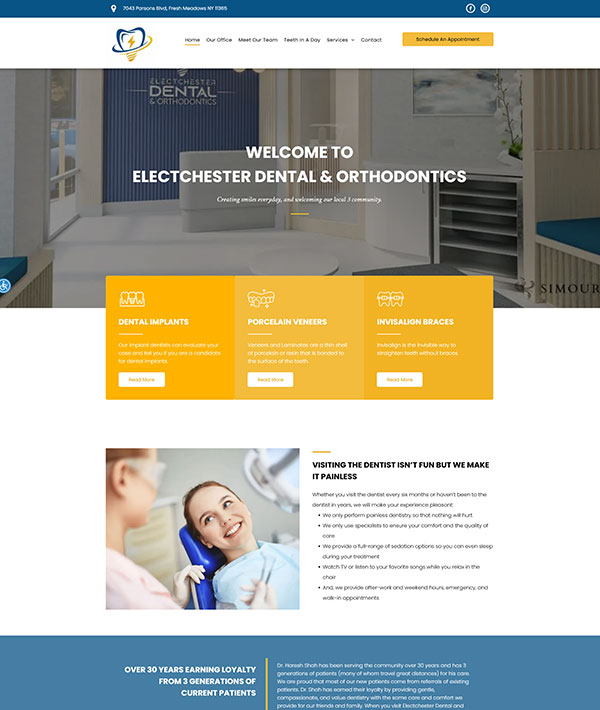

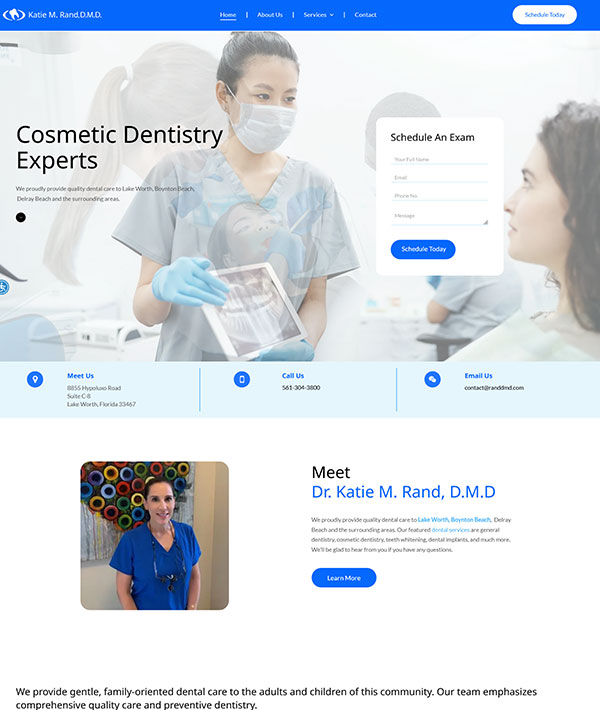

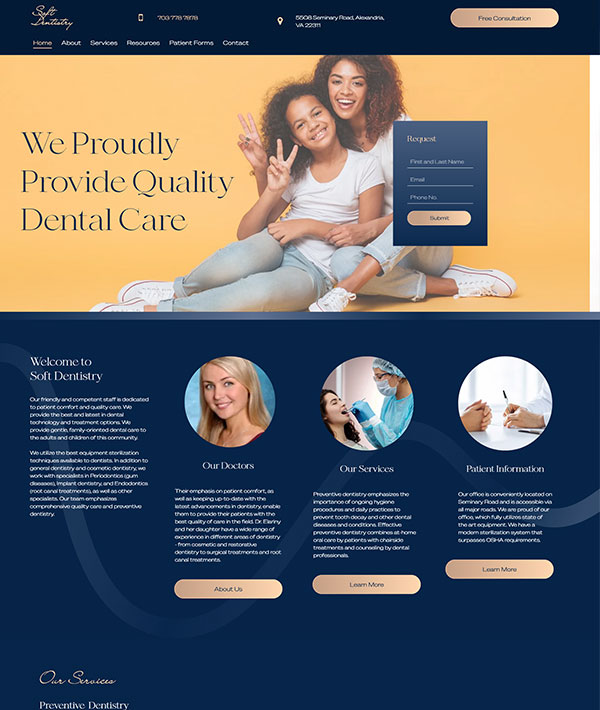

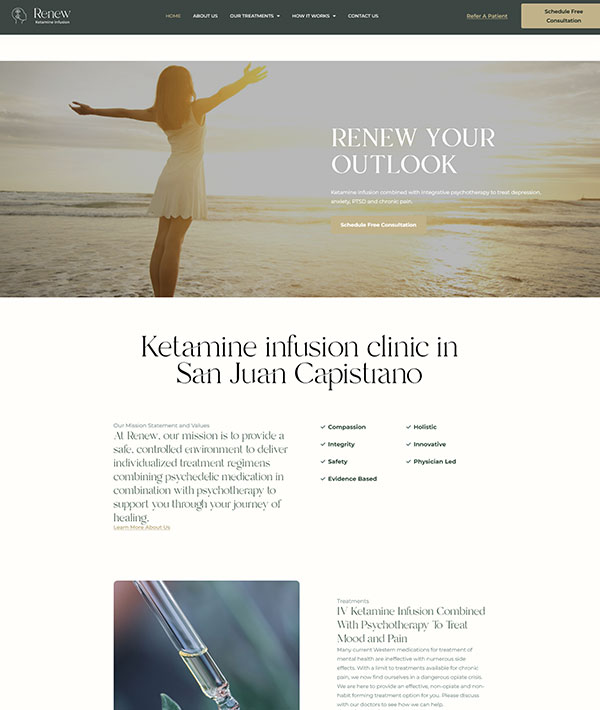

We've Done a Lot of Work. Mind Looking at a Few of Our Best?

Latest Blog Posts

If you have a business, chances are you are trying to establish an online presence.

Today, more patients are searching online for healthcare information. Through the internet, people have access to a wealth of information.